Using VOICEVOX CORE with Python (Text to Speech)

Introduction

In a previous article, I tried speech synthesis and speech recognition using Google Cloud Platform, but frequent use can become costly. Since I wanted to run everything for free, this time I tried using VOICEVOX.

It is described as “a free and moderately high-quality text-to-speech software,” but I personally think it's high-quality enough and an excellent piece of software. Implementing a speech synthesis engine from scratch would be a lot of work...

I ran into several errors while using it, so I’ve written down how I resolved them. Depending on future updates, the information may change, but I hope it will be useful.

▼VOICEVOX official page

https://voicevox.hiroshiba.jp/

▼VOICEVOX software terms of use

https://voicevox.hiroshiba.jp/term/

▼Previous related article

Overview of VOICEVOX

▼If you just want to try it out, download it from here. It is distributed as a desktop application, which appears to be built with Electron.

https://voicevox.hiroshiba.jp/

▼Here is the overall architecture of VOICEVOX. This time, we will use the core component.

https://github.com/VOICEVOX/voicevox/blob/main/docs/%E5%85%A8%E4%BD%93%E6%A7%8B%E6%88%90.md

▼GitHub repository for the core component

https://github.com/VOICEVOX/voicevox_core

Running with Python

Setting up the environment

▼Python environment setup instructions are written here.

https://github.com/VOICEVOX/voicevox_core/blob/main/example/python/README.md

This time, I am running it on Windows using VS Code, through a PowerShell terminal. My Python version is 3.10.11.

▼I am using version 0.14.5 of voicevox_core.

https://github.com/VOICEVOX/voicevox_core/releases/tag/0.14.5

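First, create a voicevox folder, download download.exe into it with the following commands, and run it. The downloader fetches the required libraries into a voicevox_core folder. Note that this URL points to the latest downloader rather than to a specific release.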

mkdir voicevox

cd voicevox

Invoke-WebRequest https://github.com/VOICEVOX/voicevox_core/releases/latest/download/download-windows-x64.exe -OutFile ./download.exe

.\download.exe

Install the Python libraries using pip.

pip install https://github.com/VOICEVOX/voicevox_core/releases/download/0.14.5/voicevox_core-0.14.5+cpu-cp38-abi3-win_amd64.whl
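To confirm which build pip actually installed, a check along these lines may help. It is only a minimal sketch using the standard library: `installed_version` is a helper name made up for this example, and the distribution name `voicevox_core` is an assumption taken from the wheel file name above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version string of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "voicevox_core" is assumed to be the distribution name (from the wheel file name).
    found = installed_version("voicevox_core")
    if found is None:
        print("voicevox_core is not installed in this environment")
    else:
        print("voicevox_core version:", found)
```

Running this with the same interpreter that VS Code uses also shows whether pip installed the wheel into the environment you expect.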

▼The folder structure looks like this.

voicevox

├─ voicevox_core

└─ download.exe

Running the program

I tried running the sample program from the voicevox_core repository, but I encountered errors and was unable to run it.

▼Here is the sample program I tried.

https://github.com/VOICEVOX/voicevox_core/tree/main/example/python

First, I got the following error. I had installed the 64-bit versions of both Python and VOICEVOX.

ImportError: DLL load failed while importing _rust: %1 は有効な Win32 アプリケーションではありません。

(The Japanese part of the message means "%1 is not a valid Win32 application.")

This means that loading the DLL file failed. You can fix this by placing onnxruntime.dll, which is included in the voicevox_core folder you downloaded earlier, in the correct location.

I was able to avoid the error in two ways:

- Run the program in the directory where onnxruntime.dll is located

- Copy onnxruntime.dll into the voicevox_core folder installed via pip
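The second workaround can be scripted. The following is a rough sketch, not an official procedure: `find_package_dir` and `copy_dll` are helper names invented here, and the relative path to onnxruntime.dll assumes the script is run from the voicevox folder created earlier. The package is located with `importlib.util.find_spec`, which does not import it, so the DLL error itself is not triggered.

```python
import importlib.util
import shutil
from pathlib import Path
from typing import Optional


def find_package_dir(package_name: str) -> Optional[Path]:
    """Locate a top-level package's install directory without importing it."""
    spec = importlib.util.find_spec(package_name)
    if spec is None or not spec.submodule_search_locations:
        return None
    return Path(list(spec.submodule_search_locations)[0])


def copy_dll(dll_path: Path, target_dir: Path) -> Path:
    """Copy a DLL into target_dir and return the path of the copy."""
    if not dll_path.is_file():
        raise FileNotFoundError(f"DLL not found: {dll_path}")
    if not target_dir.is_dir():
        raise NotADirectoryError(f"Target folder not found: {target_dir}")
    return Path(shutil.copy2(dll_path, target_dir))


if __name__ == "__main__":
    # Assumed layout: ./voicevox_core/onnxruntime.dll, as created by download.exe.
    dll = Path("voicevox_core") / "onnxruntime.dll"
    package_dir = find_package_dir("voicevox_core")
    if package_dir is None or not dll.is_file():
        print("voicevox_core package or onnxruntime.dll not found; nothing copied")
    elif package_dir.resolve() == dll.parent.resolve():
        # Happens when only the downloaded folder is visible, not the pip package.
        print("the pip-installed package was not found; nothing copied")
    else:
        print("copied to", copy_dll(dll, package_dir))
```

Copying has to be repeated whenever the package is reinstalled, whereas the first workaround needs no extra step but ties you to one working directory.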

Next, I also encountered an error saying that voicevox_core.blocking does not exist. This may be resolved in a future update.
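Before running a sample, it can be useful to check whether the installed package provides the module the sample imports. Here is a small sketch using only the standard library; `module_available` is a name chosen for this example. For a dotted name, `find_spec` imports the parent package first, so a parent that fails to load (for example because of the DLL error above) is reported as missing too.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Report whether a (possibly dotted) module name can be found.

    A missing or broken parent package counts as "not available".
    """
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        return False


if __name__ == "__main__":
    for name in ("voicevox_core", "voicevox_core.blocking"):
        status = "available" if module_available(name) else "missing"
        print(f"{name}: {status}")
```

If the first name is available and the second is missing, the installed release simply predates the sample code, and a sample that does not rely on `voicevox_core.blocking` is the safer choice.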

About voicevox_core.blocking

The current Python binding of voicevox_core includes a blocking.py.

▼Here it is.

https://github.com/VOICEVOX/voicevox_core/tree/main/crates/voicevox_core_python_api

I was using version 0.14.5, the latest release at the time of writing, but version 0.15.0 appears to be on the way. When that happens, it may include...

In the following article, there was a program that did not use voicevox_core.blocking, and this version was able to run successfully.

▼Here is the article.

https://qiita.com/taka7n/items/1dc61e507274b93ee868

You can specify the speaker by ID, and the following program displays a list of available speakers.

from voicevox_core import METAS

from pprint import pprint

pprint(METAS)

Save the following program in the voicevox_core folder created earlier and run it.

from pathlib import Path

from voicevox_core import VoicevoxCore

import sys

core = VoicevoxCore(open_jtalk_dict_dir=Path("./open_jtalk_dic_utf_8-1.11"))

speaker_id = 2

text = sys.argv[1]

if not core.is_model_loaded(speaker_id):

    core.load_model(speaker_id)

wave_bytes = core.tts(text, speaker_id)

with open("./" + text + ".wav", "wb") as f:

    f.write(wave_bytes)

Since the ID is 2, the voice used is the normal voice of “Shikoku Metan.”
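To see which speaker and style a given ID refers to, the speaker list can be turned into a lookup table. This is a minimal sketch under an assumption worth checking against the pprint output: each METAS entry is assumed to expose `name` and `styles`, and each style `name` and `id`. `build_style_table` is a made-up helper, and the sample data is placeholder data, not real VOICEVOX speakers.

```python
from types import SimpleNamespace
from typing import Dict, Iterable, Tuple


def build_style_table(metas: Iterable) -> Dict[int, Tuple[str, str]]:
    """Map each style ID to a (speaker name, style name) pair."""
    table: Dict[int, Tuple[str, str]] = {}
    for meta in metas:
        for style in meta.styles:
            table[style.id] = (meta.name, style.name)
    return table


if __name__ == "__main__":
    # Placeholder entries for illustration; real code would pass voicevox_core.METAS,
    # assuming its entries have the attributes used above.
    sample = [
        SimpleNamespace(
            name="speaker A",
            styles=[
                SimpleNamespace(name="style 1", id=0),
                SimpleNamespace(name="style 2", id=2),
            ],
        ),
    ]
    for style_id, (speaker, style) in sorted(build_style_table(sample).items()):
        print(style_id, speaker, style)
```

With such a table, the script could print the chosen voice before synthesis instead of relying on a hard-coded ID being remembered correctly.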

Since the program converts command-line arguments into speech, run it like this:

python .\voicevox.py こんにちは
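Because the script uses the input text directly as the file name, text containing characters that Windows forbids in file names (such as an ASCII ? or :) would make `open()` fail. One possible way around this is sketched below. `wav_name_for` and its 50-character limit are choices made for this example, and reserved device names such as CON are not handled.

```python
import re

# Characters Windows does not allow in file names, plus ASCII control characters.
_FORBIDDEN = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def wav_name_for(text: str, max_length: int = 50) -> str:
    """Build a .wav file name from the text, replacing forbidden characters with "_"."""
    stem = _FORBIDDEN.sub("_", text).strip()[:max_length]
    # Fall back to a fixed name when nothing usable is left.
    return (stem or "output") + ".wav"


if __name__ == "__main__":
    for sample in ("こんにちは", "a/b:c", ""):
        print(repr(sample), "->", wav_name_for(sample))
```

In the script above, this would replace the `"./" + text + ".wav"` expression passed to `open()`. Truncating long text also keeps the file name well under typical path length limits.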

▼If you encounter the following error, check the path to the open_jtalk folder.

voicevox_core.VoicevoxError: OpenJTalkの辞書が読み込まれていません

(The message means "The OpenJTalk dictionary has not been loaded.")
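Since the dictionary path in the script is relative, this error often comes down to the current working directory. A small pre-check, sketched here with a hypothetical helper named `require_dict_dir`, reports the resolved path and the current directory before VOICEVOX is involved at all.

```python
from pathlib import Path


def require_dict_dir(path: str) -> Path:
    """Return the absolute dictionary directory, or raise with a readable message."""
    resolved = Path(path).resolve()
    if not resolved.is_dir():
        raise FileNotFoundError(
            f"Open JTalk dictionary folder not found: {resolved} "
            f"(current directory: {Path.cwd()})"
        )
    return resolved


# Intended use in the script above (not executed here):
# core = VoicevoxCore(open_jtalk_dict_dir=require_dict_dir("./open_jtalk_dic_utf_8-1.11"))
```

The error message then shows exactly where Python looked, which is usually enough to tell whether the script was started from the wrong folder.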

The audio is written to a file named after the input text with a .wav extension (for the command above, こんにちは.wav).
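To check that the generated file is a valid WAV without opening an audio player, the standard `wave` module can read its header. The sketch below uses a made-up helper, `describe_wav`. It makes no assumption about the sample rate or channel count VOICEVOX produces and simply reports whatever the file contains.

```python
import wave
from typing import Dict, Union


def describe_wav(path: str) -> Dict[str, Union[int, float]]:
    """Read basic properties from a WAV file header."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "sample_rate_hz": rate,
            # Duration is the frame count divided by frames per second.
            "duration_s": frames / rate if rate else 0.0,
        }


# Example call (file name taken from the command above, not executed here):
# print(describe_wav("こんにちは.wav"))
```

A duration of zero or a `wave.Error` would suggest that the synthesis step did not write usable audio.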

▼Here is an example of the generated audio.

Conclusion

Setting up the environment is always the most challenging part, but now it is ready to run. Future updates will be important to keep track of.

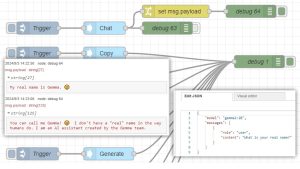

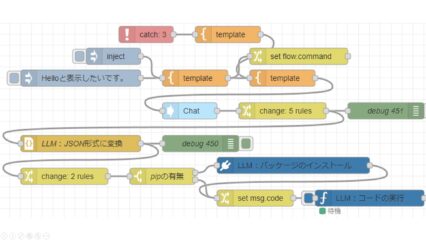

Being able to run this in Python means that it can be integrated into applications, executed from Node-RED, and embedded in robots. This opens up many possibilities. The next thing I would like is voice recognition functionality.