Trying Out NVIDIA Isaac Lab Part 2 (Running Reinforcement Learning Samples, Cartpole, Franka)

Introduction

In this post, I tried out two reinforcement learning samples in Isaac Lab.

I have experimented with reinforcement learning using Gymnasium and other tools before. What makes Isaac Lab interesting is that it trains by placing many copies of the environment side by side in a single 3D scene. The processing is heavy for my laptop, but I kept visualization enabled, at least for the first runs.
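To make the idea of "many instances at once" concrete, here is a toy sketch in plain Python. One `step` call advances a whole batch of pendulums. Any instance that falls over is reset on its own while the others keep running. As far as I understand, Isaac Lab does the equivalent with GPU tensors and real physics. The class `ToyPendulumBatch`, its crude dynamics, and the hand-tuned `pd_policy` below are hypothetical simplifications, not Isaac Lab's API.

```python
import math
import random


class ToyPendulumBatch:
    """Hypothetical toy model of N pendulums stepped together (not Isaac Lab's API)."""

    def __init__(self, num_envs, seed=0):
        self.rng = random.Random(seed)
        self.num_envs = num_envs
        # every instance starts near upright with a small random tilt (radians)
        self.angles = [self._initial_angle() for _ in range(num_envs)]
        self.velocities = [0.0] * num_envs

    def _initial_angle(self):
        return self.rng.uniform(-0.05, 0.05)

    def step(self, actions, dt=0.02, fall_angle=0.4):
        """Advance all instances by one step; return per-instance rewards and done flags."""
        rewards, dones = [], []
        for i, action in enumerate(actions):
            # crude dynamics: gravity tips the pole over, the action pushes it back
            accel = 9.81 * math.sin(self.angles[i]) - action
            self.velocities[i] += accel * dt
            self.angles[i] += self.velocities[i] * dt
            fallen = abs(self.angles[i]) > fall_angle
            rewards.append(0.0 if fallen else 1.0)
            dones.append(fallen)
            if fallen:
                # reset only the instance that fell; the others keep running
                self.angles[i] = self._initial_angle()
                self.velocities[i] = 0.0
        return rewards, dones


def pd_policy(angles, velocities, kp=20.0, kd=5.0):
    """Hand-tuned PD controller standing in for a learned policy, applied to the whole batch."""
    return [kp * a + kd * v for a, v in zip(angles, velocities)]


if __name__ == "__main__":
    envs = ToyPendulumBatch(num_envs=32)
    for _ in range(200):
        rewards, dones = envs.step(pd_policy(envs.angles, envs.velocities))
    print(f"upright after 200 steps: {int(sum(rewards))} / {envs.num_envs}")
```

In real training, a neural network policy produced by reinforcement learning would take the place of `pd_policy`. The batching and per-instance reset are the parts that carry over.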

▼I am using a gaming laptop purchased for around 100,000 yen, running Windows 11.

▼The Isaac Lab execution environment was set up as described in the following article.

▼Previous articles are here:

Running the Cartpole Sample

First, I tried the inverted pendulum (Cartpole) sample, which I assumed would be relatively lightweight in terms of processing.

▼The following page provides a list of models and environments available in Isaac Lab, which includes the Cartpole model.

https://isaac-sim.github.io/IsaacLab/main/source/overview/environments.html

▼An overview can be found on this page:

▼The page regarding the execution of training is here:

▼I have previously simulated an inverted pendulum with Gymnasium, but in Isaac Lab it is rendered as a 3D model.

I ran train.py with the following command:

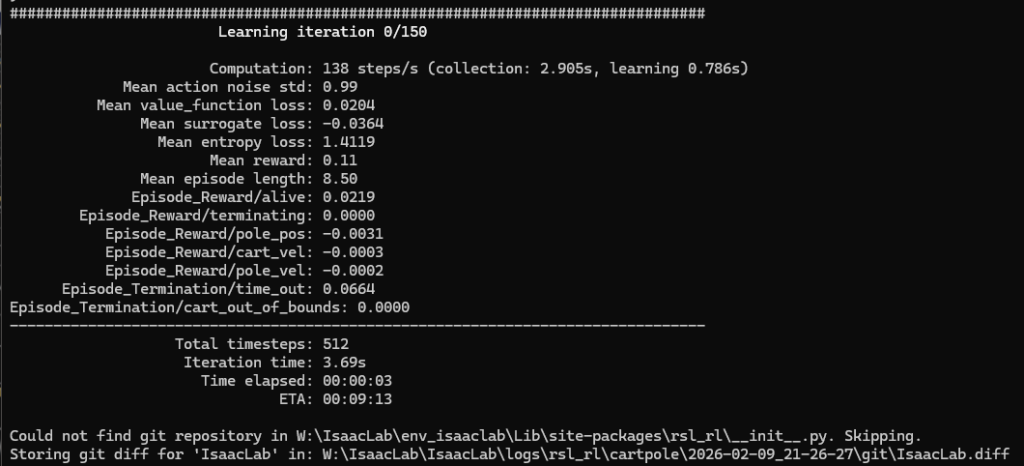

python scripts/reinforcement_learning/rsl_rl/train.py --task Isaac-Cartpole-v0 --num_envs 32▼Training started.
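When rerunning experiments with different settings, it can help to build the command line from a script instead of retyping it. The minimal sketch below assumes you launch from the Isaac Lab repository root with the same `python` as in this post. It uses only flags that appear in this post (`--task`, `--num_envs`, `--headless`, `--max_iterations`). The helpers `build_train_command` and `run_training` are hypothetical names for illustration.

```python
import subprocess

# Script path as used in this post, relative to the Isaac Lab repository root.
TRAIN_SCRIPT = "scripts/reinforcement_learning/rsl_rl/train.py"


def build_train_command(task, num_envs, headless=False, max_iterations=None, python="python"):
    """Assemble the train.py command line from the flags used in this post (hypothetical helper)."""
    cmd = [python, TRAIN_SCRIPT, "--task", task, "--num_envs", str(num_envs)]
    if headless:
        cmd.append("--headless")  # run without the viewer
    if max_iterations is not None:
        cmd += ["--max_iterations", str(max_iterations)]
    return cmd


def run_training(task, num_envs, **options):
    """Launch training and raise CalledProcessError if train.py exits with an error."""
    return subprocess.run(build_train_command(task, num_envs, **options), check=True)


# Print the command for the longest Franka run instead of launching it.
print(" ".join(build_train_command("Isaac-Reach-Franka-v0", 128, headless=True, max_iterations=10000)))
```

Calling `run_training("Isaac-Cartpole-v0", 32)` from the Isaac Lab root would start the same Cartpole run as above. If your setup launches scripts through a different interpreter, you can pass it as `python`.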

▼The state during training is here:

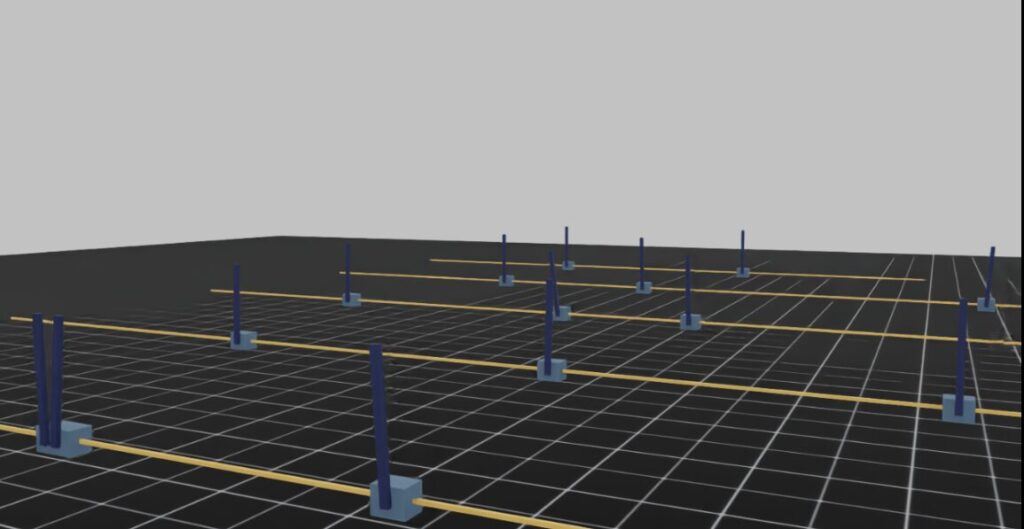

Thirty-two inverted pendulums, one per environment, were placed in the scene and training began. Over the 6-minute video, you can see the number of upright pendulums change from the beginning to the end.

▼More pendulums stayed upright in the latter half, presumably because training had progressed.

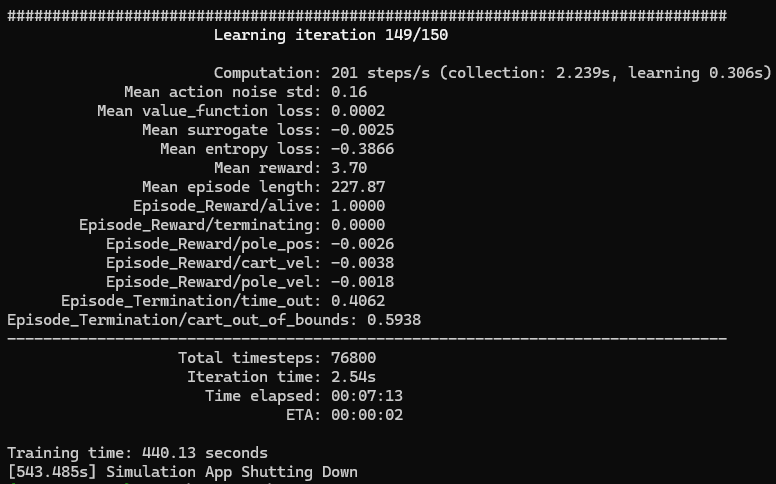

▼The final training results are here:

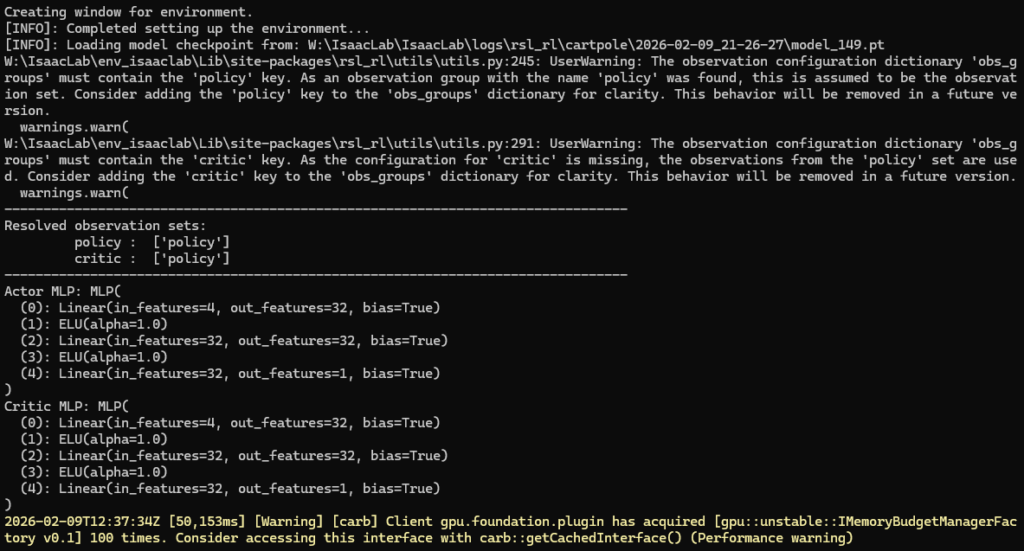

Using the trained model, I ran play.py.

python scripts/reinforcement_learning/rsl_rl/play.py --task Isaac-Cartpole-v0 --num_envs 1

▼It launched without any problems.

▼The movement looks like this:

It occasionally drifted sideways, but it kept the pole upright.

Running the Franka Sample

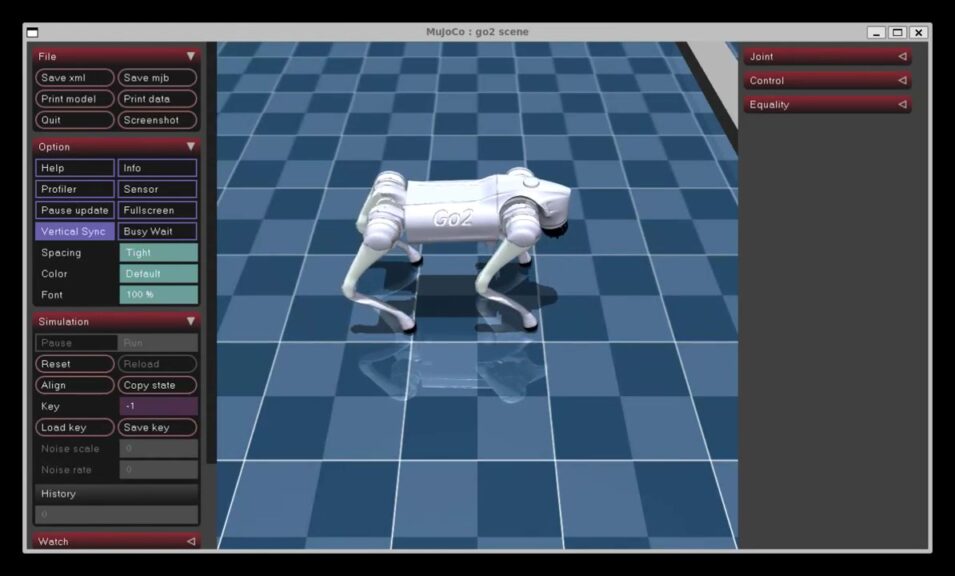

Next, I ran reinforcement learning for a 7-axis robot arm (Franka). The computational load is probably higher than for the Cartpole.

I ran train.py with the following command:

python scripts/reinforcement_learning/rsl_rl/train.py --task Isaac-Reach-Franka-v0 --num_envs 32

▼The state during training is shown here. The arms wriggle around until they reach the target coordinates.

▼With the realistic shadows, it looks like an experimental facility inside a factory.

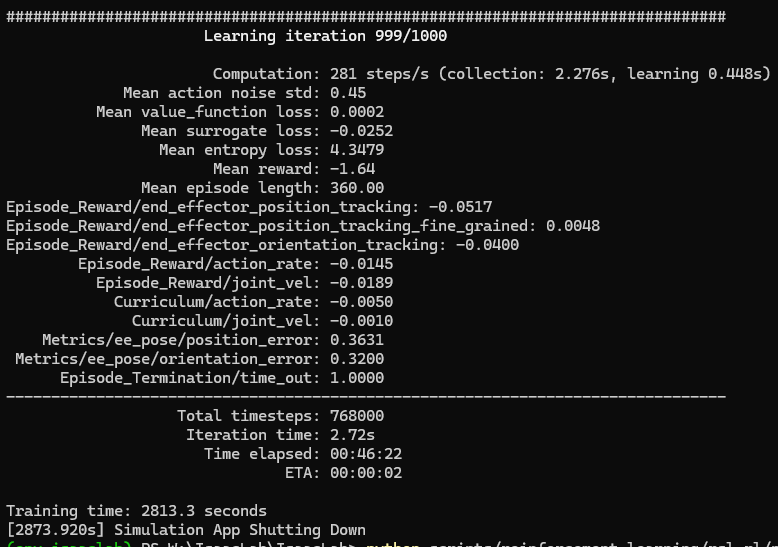

The training finished after about 46 minutes.

▼The final training results are here:

I ran play.py with the trained model.

python scripts/reinforcement_learning/rsl_rl/play.py --task Isaac-Reach-Franka-v0 --num_envs 1

▼The movement looks like this:

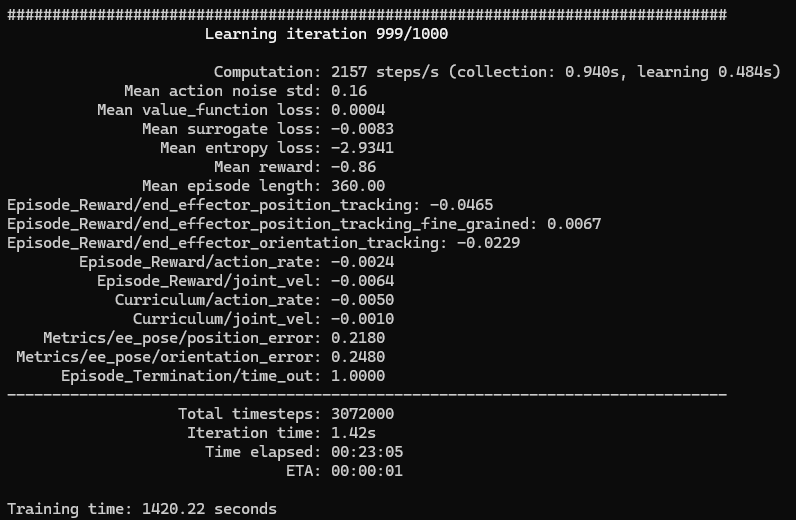

The arm did not seem to get very close to the target position, so I increased the number of environments from 32 to 128 and trained in headless mode (without visualization).

python scripts/reinforcement_learning/rsl_rl/train.py --task Isaac-Reach-Franka-v0 --num_envs 128 --headless

▼The final training results are here:

I ran play.py again.

▼The movement looks like this:

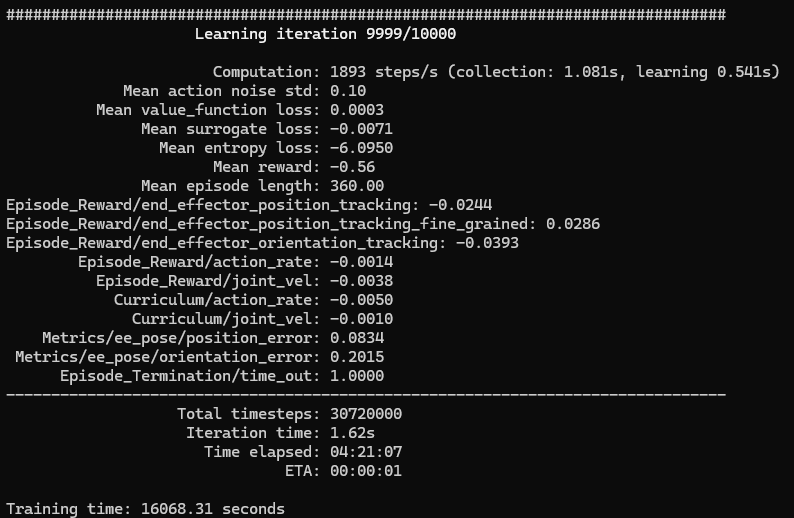

This also did not seem to reach the target coordinates very well, so I increased the number of training iterations tenfold, to 10,000.
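A rough way to compare the three Franka runs is to count how many environment transitions each one collects. The sketch below assumes the rsl_rl PPO runner gathers a fixed number of steps from every environment per iteration. It uses 24 as that value (`NUM_STEPS_PER_ENV`), which is an assumption to check against the task's agent config. It also assumes a default of 1,000 iterations, as implied by "tenfold to 10,000".

```python
# Assumed value: steps collected per environment in each PPO iteration.
# Check num_steps_per_env in the task's rsl_rl agent config before relying on it.
NUM_STEPS_PER_ENV = 24


def transitions(num_envs, iterations, steps_per_env=NUM_STEPS_PER_ENV):
    """Return (transitions per iteration, total transitions over the run)."""
    per_iteration = num_envs * steps_per_env
    return per_iteration, per_iteration * iterations


# The three Franka runs from this post (1,000 iterations assumed as the default).
runs = [
    ("32 envs, 1,000 iterations", 32, 1_000),
    ("128 envs, 1,000 iterations", 128, 1_000),
    ("128 envs, 10,000 iterations", 128, 10_000),
]

for label, num_envs, iterations in runs:
    per_iteration, total = transitions(num_envs, iterations)
    print(f"{label}: {per_iteration:,} per iteration, {total:,} total")
```

Under these assumptions, the runs collect about 0.77 million, 3.1 million, and 30.7 million transitions. As I understand rsl_rl's PPO, the number of gradient updates per iteration is set by the learning epochs and mini-batch count rather than by `num_envs`. So adding environments mainly enlarges each batch, while adding iterations also adds policy updates.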

python scripts/reinforcement_learning/rsl_rl/train.py --task Isaac-Reach-Franka-v0 --num_envs 128 --headless --max_iterations 10000

▼The iteration count reached 10,000. The final training results are here:

▼It seemed to reach the target coordinates better than before.

Possibly depending on the training conditions, the hand gets close when the target is in front of the robot or nearby. When the target is off to the side or far away, it does not get very close and ends up just rotating the hand. It may be necessary to increase the number of training iterations even further.
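To check the "front vs. side, near vs. far" impression with numbers rather than by eye, one option is to record each target and the final end-effector position during play. You can then average the position error per target region. The sketch below is hypothetical throughout. It assumes (target, end effector) position pairs in the robot base frame with x forward and y to the side. The 45-degree and 0.6 m thresholds are arbitrary placeholders. How to extract these positions from Isaac Lab is not covered here.

```python
import math
from collections import defaultdict


def target_bin(target, front_half_angle_deg=45.0, far_radius=0.6):
    """Classify a target (x, y, z) in the robot base frame; x forward and y sideways are assumed."""
    x, y, _ = target
    azimuth = math.degrees(math.atan2(y, x))
    direction = "front" if abs(azimuth) <= front_half_angle_deg else "side"
    reach = "far" if math.hypot(x, y) > far_radius else "near"
    return direction, reach


def mean_error_by_bin(samples):
    """samples: iterable of (target_xyz, end_effector_xyz) pairs recorded at the end of an episode."""
    errors = defaultdict(list)
    for target, ee in samples:
        # Euclidean distance between the target and where the hand actually ended up
        errors[target_bin(target)].append(math.dist(target, ee))
    return {key: sum(vals) / len(vals) for key, vals in errors.items()}
```

If the observation in this post holds, the ("side", ...) and (..., "far") bins would show larger mean errors than ("front", "near").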

Finally

I tried samples for controlling an inverted pendulum and a 7-axis robot arm, and saw that the amount of training required really does vary with the task. The robot arms I work with in my research have 4 or 6 axes rather than 7, so I am curious whether reinforcement learning can find solutions for them as well. I would like to achieve control that avoids obstacles.

▼The environments available in Isaac Lab also include part assembly. I am curious whether I can simulate the movements of the agricultural robots I am working on in my research.

https://isaac-sim.github.io/IsaacLab/main/source/overview/environments.html